What is our planned approach to the grammar of sign language?


Is the grammar of signed languages the same as that of spoken languages?

The answer is a clear no! Many people assume that sign languages are just visual translations of spoken languages. However, sign languages are complex, fully developed linguistic systems with their own grammar, syntax and cultural nuances. This difference matters for linguists, teachers and anyone interested in clear communication in different settings.

For example, in British Sign Language (BSL), a single sign can change meaning depending on the facial expression, movement and handshape. As technology progresses, understanding the nuances of sign languages becomes increasingly important for developers of communication tools like us at Signapse, where we build AI translation technology aimed at serving Deaf and hard-of-hearing communities.

Non-manual signals, like facial expressions and eyebrow positioning, are crucial in sign languages. In American Sign Language (ASL), for example, raising the eyebrows indicates a yes/no question, while furrowing them indicates a more specific inquiry built around words like “who”, “what”, or “where”. Directional verbs are also a key feature of sign languages: the direction in which the verb moves through the signing space clarifies the roles of the different participants. These rich visual elements allow sign languages to convey complex meanings and showcase their nuanced nature.

The grammatical structures of sign languages differ clearly from those of spoken languages. Spoken languages like English typically follow a Subject-Verb-Object (SVO) structure, as seen in the statement, “I eat an apple”. In contrast, sign languages commonly use a Topic-Comment structure. In BSL, for instance, "APPLE I EAT" places the topic first. Time markers in sign languages also come at the beginning of sentences, such as "TOMORROW MEET YOU" instead of "I will meet you tomorrow."

Sign languages tend to be more concise than spoken languages, often omitting auxiliary verbs like "is" or "will." Overall, sign languages use visual, spatial, and non-manual elements to convey meaning, making their grammar different from that of spoken languages.
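To make the ordering rules above concrete, here is a minimal toy sketch in Python. It is an illustration only, not how Signapse's translation system works: the `Clause` type and `to_gloss` function are hypothetical names invented for this example. It assumes a clause has already been split into its parts, and it covers only the two rules described above (time marker first, topic before comment). Auxiliaries such as "will" are simply never stored, and articles are left out of the parts. It does not model directional verbs, placement or non-manual features.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Clause:
    """A pre-analysed clause (hypothetical structure for illustration)."""
    subject: str
    verb: str
    obj: Optional[str] = None   # treated as the topic when present
    time: Optional[str] = None  # e.g. "tomorrow"


def to_gloss(clause: Clause) -> str:
    """Order the parts as: time marker, topic (object), subject, verb.

    Glosses are conventionally written in capitals, so each part is
    upper-cased. Parts that are missing are skipped.
    """
    parts = [clause.time, clause.obj, clause.subject, clause.verb]
    return " ".join(part.upper() for part in parts if part)


# "I eat an apple" -> topic first
print(to_gloss(Clause(subject="I", verb="eat", obj="apple")))
# APPLE I EAT
```

Adding `time="tomorrow"` to the same clause moves the time marker to the front of the gloss. Real BSL grammar is far richer than this fixed ordering, which is why a staged approach with Deaf linguists is needed.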

What is the history of sign language?

The history of sign languages is expansive and complex, with origins tracing back 30,000 to 35,000 years (Emilio Ferreiro, 2023). Over time, they have diversified into more than 300 sign languages, each shaped by distinct historical and cultural influences. As with spoken languages, regional variations reflect geopolitical and social factors. Early signs were likely visual representations of objects and actions, forming the basis of today’s sign languages.

One significant historical influence on the development of sign languages is the rise of empires and colonial powers. For example, French Sign Language (LSF) has influenced many sign languages worldwide due to France’s historical presence in various regions. Elements of LSF, such as its one-handed finger-spelling system, have been widely adopted, showing how interconnected sign languages are. Today, elements of LSF are present in more than a third of the world's sign languages, reflecting France's cultural and linguistic influence during its colonial era.

In Australia, Auslan, the national sign language, has roots in British Sign Language due to historical ties between the two countries. However, Auslan has also developed its own grammatical rules and vocabulary, reflecting Australia’s evolving national identity.

What role does culture play?

Culture plays a crucial role in shaping communication, and sign languages are key expressions of cultural identity, particularly within Deaf communities. Far from being simple gestures, sign languages are sophisticated, evolving systems that reflect the unique ways people express themselves and interact. They are influenced by history, geography, and societal shifts, making them dynamic and diverse.

For example, in American Sign Language (ASL), touching the chin conveys "THANK YOU," a symbol of gratitude. However, the same gesture might carry a different meaning in other cultures, such as humility or rejecting praise, highlighting the importance of cultural context. Similarly, British Sign Language (BSL) varies across regions—what’s used for "coffee" in one area may differ in another. These regional differences illustrate how languages adapt and grow based on the traditions and communities they serve.

What is our plan?

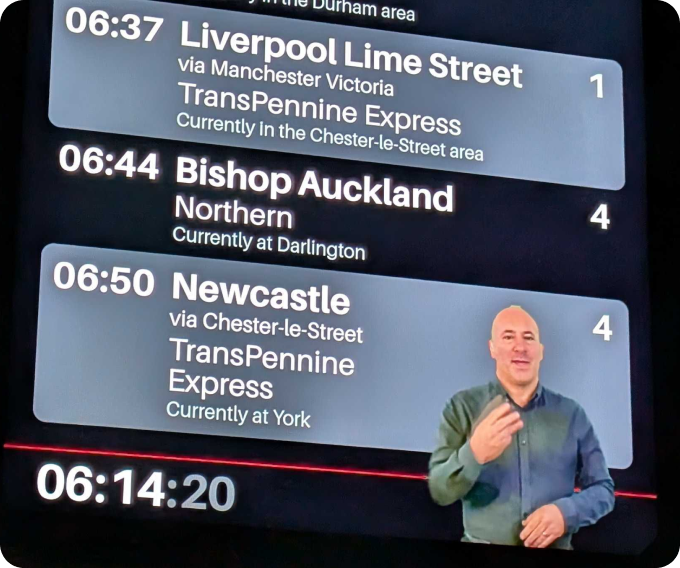

Technology, such as Signapse, will be an important tool in preserving the depth and diversity of sign languages. Signapse's AI technology doesn't just translate isolated gestures but goes deeper, capturing the emotions and intentions behind each sign. We collaborate with Deaf interpreters and linguists to maintain sign languages' authenticity and cultural richness. Using AI to analyse and document these signs, Signapse helps ensure that sign languages continue evolving while respecting their historical context and cultural significance.

At Signapse, we believe in transparency and collaboration. As we develop our AI-powered BSL translation technology, we aim to set an industry standard by openly sharing the stages of our journey. This helps communicate the current state of the technology and its future potential.

We recognise that there’s no fixed threshold for releasing a product. Instead, by framing our progress as a process with defined stages, we aim to foster trust, educate stakeholders, and reassure users about the direction and reliability of our innovations.

This is the plan for the next 12 months.

BSL Translation Stages

Stage 1: Foundational. January 2025. Our system currently bridges Stage 1 and Stage 2, incorporating placement and directional verbs while maintaining some reliance on spoken language order.

- Reliance on spoken language order

- Contextual sign variants

- Inclusion of non-manual features (e.g., facial expressions and head movements)

- Requires manual quality checking for accuracy

Stage 2: Transitional. August 2025

- Adoption of BSL grammar (e.g., Topic-Comment structure)

- Utilisation of placement and directional verbs for greater accuracy

- Integration of multi-channel signs to enhance fluidity

Stage 3: Advanced. December 2025

- Expressive signing that captures nuances and emotion

- Understanding and conveying paragraph-level context

- Accurate use of role-shift and facial expressions for clarity and emphasis

Our Commitment

Our aim is to advance through these stages collaboratively, continuously testing, learning, and improving. By sharing this journey, we invite the Deaf community and stakeholders to be part of the conversation, ensuring our solutions align with their needs and aspirations.