How does our AI technology work?

The use of AI to automatically translate from English to British Sign Language (BSL) is changing accessibility for the Deaf community. These are some of the cornerstones of our work this year:

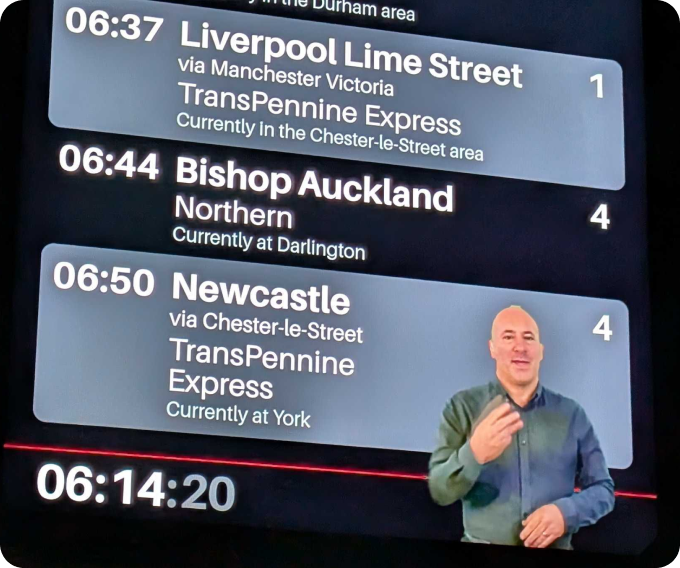

- Automatic BSL Translation: Our AI systems can translate written English into BSL rapidly and automatically. We use advanced generative AI technology to create photo-realistic sign language translations directly from written text. Our products started in the transport sector, providing live journey information in BSL and ASL. We are now moving towards open-ended translation in domains such as Video Translation and Website Translation.

- Sign Language Production: Signapse focuses on the generation of Sign Language (Text to Sign) rather than recognition (Sign to Text). This means we can concentrate our resources and expertise on creating high-quality, accurate sign language content. By specialising in sign production, we can ensure that our output meets the highest standards and effectively serves our target audience.

- SignStudio: Our new web-based Video Translation Platform can translate any video into sign language. It seamlessly integrates BSL translations into your videos using a picture-in-picture layout. Our AI translation is now live on SignStudio, and you can try it with our “Try with AI” feature. The platform ensures quick turnaround times for videos at scale, meaning your videos can reach a broad audience and be accessible to the Deaf community.

- Signapse Hub: Our internal dashboard manages all of our digital sign language content and processes your videos. Using the technology described below, your videos are translated using AI, with multiple manual quality control (QC) checks throughout the process. All translations are reviewed by our Translation team and are not released until they have been checked by multiple Deaf native BSL users.
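The release rule in the Signapse Hub bullet can be pictured as a simple gate on a translation's reviews. This is a hypothetical sketch, not the Hub's implementation: the class names, the threshold of two approvals (the text only says "multiple") and the rule that any rejection blocks release are all assumptions.

```python
from dataclasses import dataclass, field

# Assumption: "multiple" Deaf native BSL reviewers is modelled as at least two.
MIN_DEAF_NATIVE_APPROVALS = 2


@dataclass
class Review:
    reviewer: str
    deaf_native_bsl_user: bool
    approved: bool


@dataclass
class Translation:
    video_id: str
    reviews: list[Review] = field(default_factory=list)

    def approvals_from_deaf_native_users(self) -> int:
        # Count distinct reviewers who are Deaf native BSL users and approved it.
        return len({r.reviewer for r in self.reviews
                    if r.deaf_native_bsl_user and r.approved})

    def can_release(self) -> bool:
        # Assumption: any rejection blocks release until it is resolved.
        if any(not r.approved for r in self.reviews):
            return False
        return self.approvals_from_deaf_native_users() >= MIN_DEAF_NATIVE_APPROVALS
```

Counting distinct reviewer names means the same person approving twice cannot satisfy the "multiple reviewers" requirement on their own.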

These advances bridge communication gaps and ensure that Deaf individuals have better access to information and services. It's an exciting time for AI in the realm of sign language.

How do we translate written and spoken words into sign language?

Automatic Sign Language Translation using Generative AI involves creating continuous BSL sentences that make use of space and direction. Our system calculates the positioning and direction of signs to ensure the translation is both clear and understandable. This approach allows us to create videos and produce high-quality sign language content.

Automatic Sign Language Translation using Generative AI to enable Deaf accessibility

Signapse performs automatic Sign Language translation in two steps: 1) Text to Gloss and 2) Gloss to Video.
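The two-step structure can be sketched as a pipeline that composes two functions, with an optional manual check on the intermediate gloss. In this minimal sketch, all function names are hypothetical: a toy lookup table stands in for the trained LLM of Step 1, and clip names stand in for the video generation of Step 2.

```python
from typing import Callable, Optional

Gloss = list[str]


def translate(
    text: str,
    text_to_gloss: Callable[[str], Gloss],
    gloss_to_video: Callable[[Gloss], list[str]],
    review: Optional[Callable[[Gloss], Gloss]] = None,
) -> list[str]:
    """Run Step 1 (Text to Gloss), an optional manual check, then Step 2 (Gloss to Video)."""
    gloss = text_to_gloss(text)
    if review is not None:
        # A human reviewer may correct the gloss before any video is generated.
        gloss = review(gloss)
    return gloss_to_video(gloss)


# Toy stand-ins (hypothetical): a lookup table instead of an LLM,
# and clip names instead of rendered video frames.
TOY_GLOSS = {"what is your name?": ["NAME", "YOU", "WHAT"]}


def toy_text_to_gloss(text: str) -> Gloss:
    return TOY_GLOSS[text.strip().lower()]


def toy_gloss_to_video(gloss: Gloss) -> list[str]:
    return [f"clip:{sign}" for sign in gloss]
```

Calling `translate("What is your name?", toy_text_to_gloss, toy_gloss_to_video)` returns `["clip:NAME", "clip:YOU", "clip:WHAT"]`. Keeping the gloss as an explicit intermediate value is what makes a manual check possible between the two steps.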

1. Text to Gloss

Gloss

Our first step translates from written text (e.g. English) into sign language grammar and syntax (e.g. BSL). This step uses a written form of sign language grammar called Gloss, which lists the signs in BSL order and captures BSL linguistic constructs such as Directional Verbs and Non-Manual Features. Because both the input and the output are written, we can use standard NLP technology to translate from written text to written Gloss.

An example translation of “What is your name?” is shown below, translating to “NAME YOU WHAT” in BSL gloss. This gloss sequence can then be generated as a BSL video in Step 2.

Large Language Models (LLMs)

To perform this translation, we use cutting-edge Large Language Model (LLM) technology, as popularised by ChatGPT. Because an LLM has already been trained on a large amount of English text, it can learn the complex structure and grammar of BSL when shown gloss data. Signapse collects thousands of BSL gloss sentences from real BSL translations to use as training data for the LLM, teaching it BSL word order and the use of Non-Manual Features (NMF) and Multi-Channel Signs (MCS). Once trained, the LLM can automatically generate a Gloss sentence for a new English sentence, using the learnt BSL grammar to provide an accurate translation in context.
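One common way to present parallel English and gloss sentences to an LLM for fine-tuning is as prompt/completion records serialised as JSON Lines. The sketch below assumes that layout. The prompt template and field names are illustrative assumptions, not Signapse's training format or any particular provider's schema.

```python
import json

# Assumed prompt template; real fine-tuning formats vary by model and provider.
PROMPT_TEMPLATE = "Translate English to BSL gloss.\nEnglish: {english}\nGloss:"


def make_record(english: str, gloss: str) -> dict:
    """Build one training example from a real English/gloss translation pair."""
    return {
        "prompt": PROMPT_TEMPLATE.format(english=english.strip()),
        # Normalise whitespace so every gloss is a single-space-separated sequence.
        "completion": " " + " ".join(gloss.split()),
    }


def to_jsonl(pairs) -> str:
    """Serialise (english, gloss) pairs as JSON Lines, one record per line."""
    return "\n".join(json.dumps(make_record(e, g)) for e, g in pairs)


pairs = [("What is your name?", "NAME YOU WHAT")]
jsonl = to_jsonl(pairs)
```

Because `json.dumps` escapes the newlines inside each prompt, every record stays on exactly one line of the output.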

Our translation process also contains BSL-specific linguistic elements:

- Sign Variants: In BSL, there are multiple ways to express the same concept depending on the context. For example, the sign for "MATCH" is different if referring to a football match or matching lottery numbers. Our technology uses the translation context to select the correct sign variant for this use case.

- Directional Verbs and Placement: In BSL, placement is fundamental to representing space and the relationships between multiple concepts. Our AI technology can place concepts in space and refer back to them later, making the translation easier to understand. Once placements have been established, it also adjusts the direction of verbs to show which concept is performing the action and who is receiving it. For example, the sign "HELP" would move from the concept providing the help (e.g. LEFT) to the person receiving the help (e.g. YOU), with the direction highlighting the relationship.

- Non-Manual Features: NMFs are essential grammatical elements in BSL that use facial expressions, head movements and mouth patterns. These can modify the meaning of signs, show questions, indicate negation, or express emotion. For example, raising eyebrows often indicates a yes/no question, while furrowing them can indicate a "wh" question. Our gloss representation contains NMF flags, allowing the AI to generate a more nuanced translation.
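To make sign variants and directional verbs concrete, the sketch below picks a variant by keyword overlap with the English context and assigns referents to locations in signing space so that a verb gets a start and end point. It is a deliberately simple, hypothetical stand-in: the keyword table, the `@` variant suffix, the location names (`SIGNER`, `ADDRESSEE`, `LEFT`, `RIGHT`) and the left-then-right allocation order are all assumptions. The text describes an LLM that uses translation context, not a keyword lookup.

```python
import re

# Hypothetical variant table: keywords in the English context that select each variant.
VARIANTS = {
    "MATCH": {
        "MATCH@FOOTBALL": {"football", "goal", "team", "score"},
        "MATCH@LOTTERY": {"lottery", "numbers", "ticket", "jackpot"},
    },
}


def choose_variant(gloss: str, context: str) -> str:
    """Return the variant whose keywords best overlap the context, else the plain gloss."""
    options = VARIANTS.get(gloss)
    if not options:
        return gloss
    words = set(re.findall(r"[a-z']+", context.lower()))
    best, best_score = gloss, 0
    for variant, keywords in options.items():
        score = len(words & keywords)
        if score > best_score:
            best, best_score = variant, score
    return best


class SigningSpace:
    """Tracks where each referent has been placed so later signs can point back to it."""

    # Assumption: I and YOU have fixed locations; other referents take free ones in order.
    FIXED = {"I": "SIGNER", "YOU": "ADDRESSEE"}
    FREE = ("LEFT", "RIGHT")

    def __init__(self) -> None:
        self.loci = dict(self.FIXED)
        self._free = list(self.FREE)

    def place(self, referent: str) -> str:
        # Reuse an existing placement so repeated references stay consistent.
        if referent not in self.loci:
            if not self._free:
                raise ValueError(f"no free location for {referent}")
            self.loci[referent] = self._free.pop(0)
        return self.loci[referent]

    def directional(self, verb: str, agent: str, patient: str) -> str:
        # The verb moves from the agent's location to the patient's location.
        return f"{verb}:{self.place(agent)}->{self.place(patient)}"
```

With a fresh `SigningSpace`, `directional("HELP", "DOCTOR", "YOU")` places the assumed referent DOCTOR on the left and yields `"HELP:LEFT->ADDRESSEE"`, mirroring the HELP example in the Directional Verbs bullet.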

A more nuanced translation of “What is your name?” could then be “NAME YOU^NMF WHAT@QUESTION”, utilising the NMF version of YOU and the Question variant of WHAT.
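Annotated gloss such as “NAME YOU^NMF WHAT@QUESTION” can be parsed into structured tokens before video generation. The sketch below assumes a token grammar of `BASE`, followed by zero or more `^FLAG` non-manual markers and an optional `@VARIANT` suffix. That grammar is inferred from this single example and is an assumption, not a published Signapse specification.

```python
import re
from dataclasses import dataclass
from typing import Optional

# Assumed grammar: BASE, then any number of ^FLAG markers, then an optional @VARIANT.
TOKEN_RE = re.compile(
    r"(?P<base>[A-Z0-9'-]+)(?P<flags>(?:\^[A-Z_]+)*)(?:@(?P<variant>[A-Z_]+))?"
)


@dataclass(frozen=True)
class GlossToken:
    base: str                       # citation form of the sign, e.g. "YOU"
    nmf: tuple[str, ...] = ()       # non-manual feature flags, e.g. ("NMF",)
    variant: Optional[str] = None   # selected sign variant, e.g. "QUESTION"


def parse_gloss(sentence: str) -> list[GlossToken]:
    """Split a gloss sentence on whitespace and parse each annotated token."""
    tokens = []
    for raw in sentence.split():
        m = TOKEN_RE.fullmatch(raw)
        if m is None:
            raise ValueError(f"malformed gloss token: {raw!r}")
        # "^NMF^X" -> ("NMF", "X"); an empty flags group gives an empty tuple.
        flags = tuple(m.group("flags").split("^")[1:])
        tokens.append(GlossToken(m.group("base"), flags, m.group("variant")))
    return tokens
```

Rejecting malformed tokens outright, rather than guessing, fits a workflow in which the gloss is checked manually before any video is produced.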

Once the Gloss sentence has been generated, it is passed on to Step 2 to generate the BSL video. Another advantage of Text to Gloss translation is that the generated gloss sentence can be checked manually before it is generated as a BSL video. All external translations are reviewed by our BSL Data Collection team at this stage.

2. Gloss to Video

Gloss sentences are a helpful representation, but they must be generated as a BSL video before they can be used. To generate a BSL video, we record examples of each gloss in a high-quality capture studio with ~30 cameras. Our Deaf BSL-native translators have recorded ~12,000 glosses, complete with Directional Verbs (~40 versions of each verb!) and Non-Manual Features.
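Because directional verbs are recorded in many versions, a recorded clip is identified by more than its gloss alone. The sketch below keys each clip by gloss, start and end location, and an NMF flag, and reports any combination that has not been recorded so the sentence can be flagged for review. The key fields, file names and the report-missing policy are assumptions made for illustration.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class ClipKey:
    gloss: str
    start: Optional[str] = None  # start location, for directional verbs only
    end: Optional[str] = None    # end location, for directional verbs only
    nmf: bool = False            # True for the non-manual-feature version


class ClipLibrary:
    """Maps each recorded sign version to its (hypothetical) clip file."""

    def __init__(self) -> None:
        self._clips: dict[ClipKey, str] = {}

    def add(self, key: ClipKey, path: str) -> None:
        self._clips[key] = path

    def versions(self, gloss: str) -> int:
        # How many recorded versions exist for one gloss (e.g. each verb direction).
        return sum(1 for k in self._clips if k.gloss == gloss)

    def resolve(self, keys: list[ClipKey]) -> tuple[list[str], list[ClipKey]]:
        """Return clip paths in sentence order, plus any keys with no recording."""
        paths: list[str] = []
        missing: list[ClipKey] = []
        for key in keys:
            if key in self._clips:
                paths.append(self._clips[key])
            else:
                missing.append(key)
        return paths, missing
```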

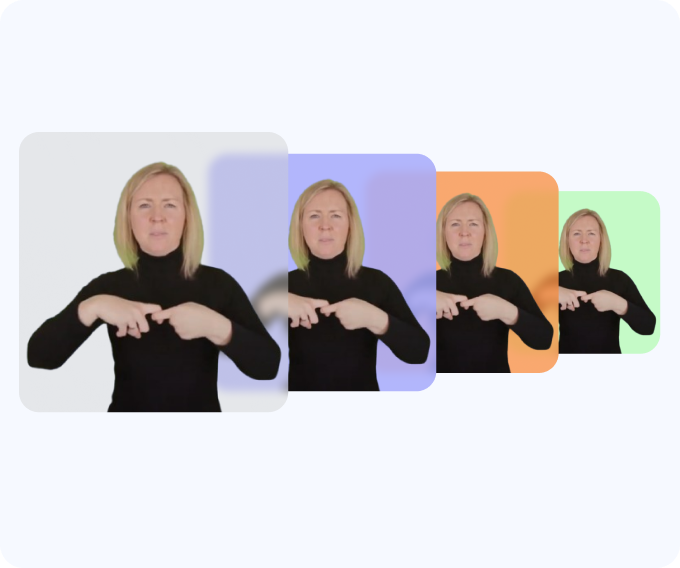

Our photo-realistic digital signer uses world-leading Computer Vision technology to generate a BSL video that is indistinguishable from a human signer. We produce sign language videos by blending between different glosses, with our digital signer calculating the positioning and direction of the glosses to ensure the video is smooth and understandable. This approach allows us to create seamless and realistic videos, converting diverse recordings into a consistent high-quality appearance.
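The idea of blending between glosses can be illustrated with a much simpler technique than the one described: linearly interpolating 2D body keypoints across a few transition frames between the last frame of one clip and the first frame of the next. This is a swapped-in illustration only. It does not produce photo-realistic video, and the keypoint representation and the default of four transition frames are assumptions.

```python
Point = tuple[float, float]
Frame = list[Point]


def lerp_frame(a: Frame, b: Frame, t: float) -> Frame:
    """Linearly interpolate every keypoint between frames a (t=0) and b (t=1)."""
    if len(a) != len(b):
        raise ValueError("frames must have the same number of keypoints")
    return [(ax + (bx - ax) * t, ay + (by - ay) * t) for (ax, ay), (bx, by) in zip(a, b)]


def blend_clips(clips: list[list[Frame]], transition_frames: int = 4) -> list[Frame]:
    """Concatenate clips, inserting interpolated frames at each boundary."""
    out: list[Frame] = []
    for clip in clips:
        if not clip:
            raise ValueError("empty clip")
        if out:
            prev, nxt = out[-1], clip[0]
            # t runs strictly between 0 and 1, so the original frames are not duplicated.
            for k in range(1, transition_frames + 1):
                out.append(lerp_frame(prev, nxt, k / (transition_frames + 1)))
        out.extend(clip)
    return out
```

Linear interpolation gives constant-speed transitions; a smoother easing curve or a learned generative model would be needed for natural-looking motion.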

You can see a BSL video of the above example using this approach.

We believe that BSL users prefer to watch a realistic-looking signer rather than a graphical avatar, as this is the appearance they are used to. We will exclusively use native Deaf signers for our Digital Signer appearances, with express written consent and a remuneration scheme for use of their appearance in commercial settings. We currently provide a single consistent appearance for our Digital Signer, with additional appearances available soon.

BSL Stages

We are proud of our AI BSL translation technology, but we know that many improvements are still needed. BSL is a rich visual language, with complex structures that can be hard to generate in 2D video. For example, role shift is a subtle feature that is important for communication but difficult to generate smoothly with our current approach. We are also aware that, for more complex sentences, our current translations can rely to some extent on spoken language word order, i.e. Sign Supported English (SSE).

Approximately a third of Signapse staff are BSL users, and we have a prominent Deaf Advisory Board and host regular Deaf User Groups. These help us understand the limitations of our current technology and the improvements required to generate the highest-quality BSL translations. However, we believe that our current AI can generate understandable BSL translations, and it has been evaluated positively by these groups. Additionally, many clients want to provide Deaf accessibility now, a need that current solutions cannot meet, and are ready for AI translation.

This is why we’d like to openly share our AI BSL translation journey and the current state of the technology, both its features and its limitations. We will soon release a framework describing the multiple stages of AI translation and the required improvements, which can be used as an industry standard to communicate where the technology currently stands and its future potential. There is no required threshold for a product to be released, but we believe this openness will provide valuable information and support to the Deaf community.

_______________________________________________________________________________________

Don't just imagine a more inclusive future: help create it. Our groundbreaking whitepaper reveals how AI is transforming accessibility with real-time sign language translation, bridging critical gaps and empowering inclusivity across industries.

Explore powerful case studies, ethical innovations, and actionable solutions.

Download the Whitepaper Now and join the movement toward a world where communication knows no barriers!