What Makes an AI Sign Language Interpreter Fluent?

Sign languages such as British Sign Language (BSL) and American Sign Language (ASL) are rich, visual and deeply cultural languages. They rely on handshapes, movement, facial expressions, body posture and spatial grammar, features that make them uniquely expressive and also uniquely challenging for artificial intelligence to understand. As emerging AI models attempt to interpret sign language in real time, many people ask: “What would it mean for an AI sign language interpreter to be fluent?”

To answer this, we need to compare how humans learn sign language, how AI learns and how both approaches intersect. This blog explores the nature of fluency in BSL and ASL, what it takes for humans and machines to acquire it, and the future of AI-powered sign language translation.

- Human Fluency vs Machine Fluency: What Does It Look Like?

- How to Become an ASL and BSL Interpreter?

- How Long Does It Take to Become Fluent in ASL and BSL?

- Can AI Learn Sign Language?

- What Makes an AI Sign Language Interpreter Fluent?

- How SignStream and SignStudio Work

- So, What Does the Future Look Like for AI Sign Language Translators?

- How Signapse are a Leader in Sign Language AI

Human Fluency vs Machine Fluency: What Does It Look Like?

When people talk about becoming “fluent” in a spoken or signed language, they often mean the ability to understand and express ideas naturally, accurately and appropriately. Human fluency involves cultural awareness, emotional nuance and the skill of reading context.

But for AI, fluency is something different. It’s built on:

- The size and quality of training data

- The accuracy of hand, face and body tracking

- The sophistication of its language models

- The ability to interpret movement, emotion and context

- Continuous learning through feedback loops

AI fluency is measured in precision, recall and real-time processing speed. However, these metrics don’t fully capture the cultural intelligence and context sensitivity that human interpreters bring.
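As a rough illustration of two of these metrics, the sketch below computes precision and recall for a single sign from a list of predictions. The gloss labels and the `precision_recall` helper are made up for this example and do not come from any real system.

```python
def precision_recall(true_labels, predicted_labels, target):
    """Return (precision, recall) for one sign label."""
    pairs = list(zip(true_labels, predicted_labels))
    # True positive: the model said `target` and the signer did sign it.
    tp = sum(1 for t, p in pairs if t == target and p == target)
    # False positive: the model said `target` but the signer signed something else.
    fp = sum(1 for t, p in pairs if t != target and p == target)
    # False negative: the signer signed `target` but the model missed it.
    fn = sum(1 for t, p in pairs if t == target and p != target)
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    return precision, recall


# Hypothetical labels for five short clips (illustrative only).
true_signs = ["HELLO", "THANK-YOU", "HELLO", "TRAIN", "HELLO"]
predicted = ["HELLO", "HELLO", "HELLO", "TRAIN", "TRAIN"]

precision, recall = precision_recall(true_signs, predicted, "HELLO")
print(f"precision={precision:.2f} recall={recall:.2f}")
```

Precision asks how often the model was right when it claimed to see a sign. Recall asks how many real occurrences of that sign it caught. Neither number says whether the meaning of the whole sentence was understood.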

To understand why AI often struggles with sign language, it helps to know how humans become interpreters in the first place.

How to Become an ASL and BSL Interpreter?

Becoming a certified sign language interpreter requires years of training and deep cultural understanding. The path varies by country, but generally includes:

1. Learning the Language Deeply

Interpretation requires far more than conversational skills. Trainees must learn:

- Grammar and syntax

- Classifiers

- Fingerspelling

- Non-manual signals (facial expressions, mouth patterns)

- Regional variations and dialects

- Deaf culture and etiquette

Interpreting is not simply “translating signs into English”; it’s transferring meaning between two distinct languages.

2. Formal Education and Qualifications

For BSL in the UK, the path typically involves:

- BSL Level 1-6 qualifications

- A Level 6 Diploma in Sign Language Interpreting or an MA in Interpreting

- Registration as a Trainee Sign Language Interpreter with NRCPD (National Registers of Communication Professionals working with Deaf and Deafblind People)

- Qualification and registration as a Registered Sign Language Interpreter with NRCPD

For ASL interpreters in the US, qualifications typically include:

- A two- or four-year degree in ASL interpreting

- Accredited training programmes

- Mentorship from certified interpreters

- Passing the NIC (National Interpreter Certification) exams

3. Mastering Interpreting Modes

Interpreters must be able to perform:

- Simultaneous interpreting - interpreting in real time

- Consecutive interpreting - interpreting segment by segment

- Video remote interpreting (VRI) - interpreting via video calls

- Specialised terminology - legal, medical, educational, business

These modes demand rapid mental processing and deep language fluency.

4. Cultural Competence

Interpreting is not purely linguistic. Interpreters must understand:

- Deaf cultural values

- Historical context

- Social norms

- Communication preferences

It is cultural fluency that AI systems currently lack the most.

How Long Does It Take to Become Fluent in ASL and BSL?

Human fluency varies, but most learners need 3-7 years to achieve advanced proficiency, and 5-10 years to become professionally competent interpreters.

Why does it take this long?

- Sign languages are not based on English grammar.

- Facial expressions are integral to grammar.

- Spatial referencing is unlike anything in spoken language.

- Nuanced meaning depends on movement quality and intensity.

Unlike learners of a spoken language, learners of a sign language can’t rely on written practice. Everything is visual, spatial and dynamic.

People who grow up in Deaf families (such as CODAs, children of Deaf adults) often achieve fluency quickly, but for second-language learners, mastery takes significant time and immersion.

AI faces many of the same challenges, but with additional barriers.

Can AI Learn Sign Language?

Yes, AI can learn sign language. But partial fluency is very different from complete fluency.

AI can learn components of sign language, including:

- Handshape recognition: Computer vision has become increasingly capable of recognising handshapes using 2D and 3D data.

- Movement tracking: Deep learning models can follow trajectories of hands, arms and upper body.

- Facial expression analysis: AI can detect eyebrow movement, eye gaze and mouth patterns, all crucial for grammar.

- Gesture classification: AI can match patterns in video to known signs.

However, this only covers part of what BSL and ASL are.

Where AI Experiences Challenges

AI still has difficulty with:

- Speed variation: Some signers sign extremely fast.

- Individual styles: No two signers sign exactly alike.

- Regional dialects: Signs differ by region and community.

- Context-dependent grammar: Meaning changes with spatial placement.

- Classifiers: Highly visual, descriptive signs that vary by context.

- Continuous signing: Unlike isolated signs, fluent signing blends one sign into the next.

- Real-world environments: Poor lighting or angles can confuse models.

- Cultural nuance: AI does not inherently understand Deaf culture.

This means AI models may recognise words but misinterpret meaning, much like a hearing learner who knows vocabulary but lacks true fluency.

What Makes an AI Sign Language Interpreter Fluent?

True AI fluency would require mastery across several levels.

1. High accuracy in recognising continuous signing

Not just individual signs, but full sentences with natural flow.

2. Understanding grammar and spatial structures

AI must comprehend:

- The use of signing space

- Directional verbs

- Classifiers

- Role shifting

- Non-manual grammar markers

This requires models trained on full linguistic datasets, not just word-level signs.

3. Cultural and contextual awareness

A fluent interpreter (human or AI) must interpret meaning, not just motion. For example:

- Sarcasm

- Emotional tone

- Code-switching

- Contextual references

Without these, interpretation becomes literal and inaccurate.

4. Ability to generalise across signers

AI must handle:

- Different body types

- Signing speeds

- Regional dialects

- Unique personal styles

This requires large, diverse datasets, many of which do not yet exist!
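One common way to check whether a model generalises across signers is to test it only on people it never saw during training. The sketch below shows such a signer-independent split. It is an assumption about general evaluation practice, not a description of Signapse's pipeline, and the field names (`signer_id`, `dialect`) are hypothetical.

```python
def split_by_signer(clips, held_out_signers):
    """Split clips so that held-out signers never appear in the training set."""
    held_out = set(held_out_signers)
    train = [c for c in clips if c["signer_id"] not in held_out]
    test = [c for c in clips if c["signer_id"] in held_out]
    return train, test


# Hypothetical clip metadata (illustrative only).
clips = [
    {"clip_id": "c1", "signer_id": "s1", "dialect": "north"},
    {"clip_id": "c2", "signer_id": "s2", "dialect": "south"},
    {"clip_id": "c3", "signer_id": "s1", "dialect": "north"},
    {"clip_id": "c4", "signer_id": "s3", "dialect": "south"},
]

train_clips, test_clips = split_by_signer(clips, ["s3"])
print(len(train_clips), "training clips,", len(test_clips), "test clips")
```

If accuracy drops sharply on the held-out signers, the model has probably memorised individual signing styles instead of learning the language.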

5. Real-time processing

AI translation must be fast enough for real conversations, ideally under 200 milliseconds of latency.
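A minimal sketch of how a latency target like this could be checked is shown below. The 200 millisecond budget comes from the text above. The `fake_translate` function is a stand-in for a real model and exists only for illustration.

```python
import time

LATENCY_BUDGET_MS = 200.0  # target taken from the text above


def measure_latency_ms(process, payload):
    """Run `process(payload)` and return (result, elapsed milliseconds)."""
    start = time.perf_counter()
    result = process(payload)
    elapsed_ms = (time.perf_counter() - start) * 1000.0
    return result, elapsed_ms


def within_budget(elapsed_ms, budget_ms=LATENCY_BUDGET_MS):
    """Return True if a measured latency meets the budget."""
    return elapsed_ms <= budget_ms


def fake_translate(frames):
    # Placeholder for a real translation model (hypothetical).
    return f"{len(frames)} frames processed"


output, elapsed_ms = measure_latency_ms(fake_translate, [0] * 30)
print(output, f"in {elapsed_ms:.3f} ms, within budget: {within_budget(elapsed_ms)}")
```

In practice the measurement would need to cover the whole pipeline, from camera capture to displayed output, and be repeated across many clips, since a single fast run says little about worst-case delays.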

Only when all these criteria are met can we describe AI as “fluent”.

How SignStream and SignStudio Work

At Signapse, we are working on creating an AI sign language translator that not only captures the essence of sign language, but also best represents the Deaf community. We are on the journey of building a large dataset that our AI works from. By collaborating with the Deaf community, we have incorporated the essentials of sign language. From its distinct grammar to facial expressions and spatial awareness, our AI is constantly being developed so that it produces accurate translations and will eventually achieve fluency.

What Training Data Does AI Need to Learn a Sign Language?

Unlike spoken languages, sign languages do not have vast collections of written text to learn from. AI must learn from video, which is harder to collect, annotate and process.

A robust dataset requires:

- Thousands of hours of diverse signers

- Multiple camera angles

- Occlusion cases (hands crossing in front of the body)

- Varied lighting and backgrounds

- Annotation by Deaf experts

- Semantic labels, not just word labels

- Non-manual signals annotated separately
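To make the list above more concrete, here is a sketch of what one annotated clip record might look like. Every class and field name is hypothetical and chosen for illustration, and the example gloss is not a verified BSL or ASL transcription.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class NonManualSignal:
    """A facial or body cue, annotated separately from the manual signs."""
    kind: str        # e.g. "raised eyebrows" or "mouth pattern"
    start_s: float   # start time in seconds
    end_s: float     # end time in seconds


@dataclass
class SignClip:
    clip_id: str
    signer_id: str
    camera_angle: str
    lighting: str
    has_occlusion: bool             # e.g. hands crossing in front of the body
    glosses: List[str]              # word-level labels
    meaning: str                    # semantic label for the whole clip
    annotated_by_deaf_expert: bool
    non_manual_signals: List[NonManualSignal] = field(default_factory=list)


def is_training_ready(clip):
    """Accept a clip only if it has expert annotation, glosses and a meaning."""
    return (
        clip.annotated_by_deaf_expert
        and bool(clip.glosses)
        and bool(clip.meaning.strip())
    )


# Hypothetical example record (illustrative only).
clip = SignClip(
    clip_id="c1",
    signer_id="s1",
    camera_angle="front",
    lighting="indoor",
    has_occlusion=False,
    glosses=["TRAIN", "LATE"],
    meaning="The train is late.",
    annotated_by_deaf_expert=True,
    non_manual_signals=[NonManualSignal("raised eyebrows", 0.2, 0.9)],
)
print(is_training_ready(clip))
```

Keeping the semantic label and the non-manual signals as separate fields reflects the last two points in the list: word labels alone do not capture what a signed sentence means.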

Want to know our process? Learn more in this deep dive into our AI technology.

Ethical and Community Considerations

We understand many people are worried about the use of AI, so we want to be clear about how we use AI technology to create our products. We work collaboratively with the Deaf community to ensure our datasets are as accurate as possible. This includes looking at markers such as correct use of sign language grammar, spatial awareness and non-manual features. By examining our digital signers against the Sign Language Production Framework (SLPF), we are continuing to build an AI signer that is authentic and best represents the Deaf community.

For more information on how we are doing this, read about our journey so far in our AI Roadmap article.

So, What Does the Future Look Like for AI Sign Language Translators?

To remain competitive, many organisations are adopting AI into their processes and accessibility tools are no different. We believe the future of AI sign language translation is a hybrid model.

Over the next 3-5 years, we expect to see major improvements in computer vision accuracy, especially in handshape recognition, facial expression tracking and continuous-sign understanding. This will raise the visual quality of our digital signers, making signs clearer and facial expressions more obvious.

In 5-10 years, AI is expected to shift from basic gesture recognition towards true linguistic understanding. The main challenge holding AI back from achieving “fluency” at the moment is the lack of data. As we build on this dataset over the next decade, we will be able to capture not only basic signs but also the emotion and meaning that come with them. This foundation will also allow us to add other sign languages to our AI in the future!

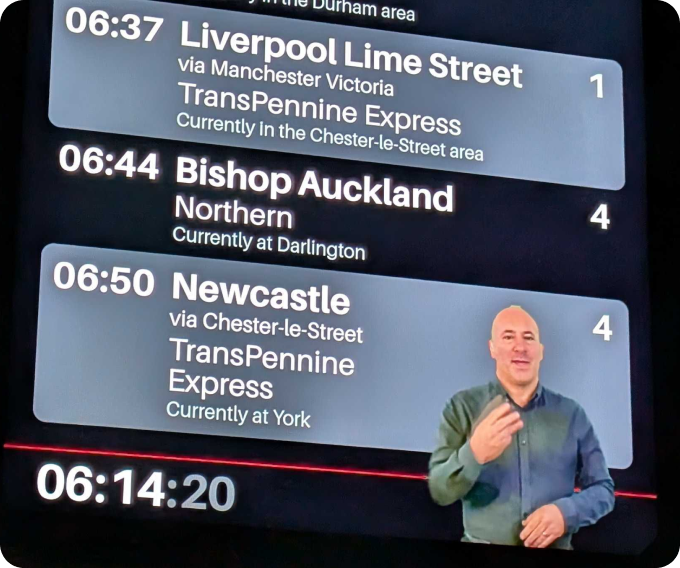

The Hybrid Model

Even with these advances, AI will still not replace certified human interpreters, especially in medical, legal or high-stakes environments. Instead, the next decade will establish AI as a valuable accessibility companion. AI sign language translators will be used for casual and predictable contexts to improve accessibility in public spaces. We expect to see digital signers being integrated across more transport networks, customer service environments and marketing content.


How Signapse are a Leader in Sign Language AI

AI is rapidly improving in its ability to translate sign language, but true fluency is a complex and multifaceted goal. Sign language is more than hand movements; it is a cultural, expressive, visual and spatial language. For AI to become “fluent”, it must understand meaning, not just motion.

Humans take years to become interpreters. AI will follow this path, requiring vast data, improved models, cultural awareness and careful collaboration with the Deaf community.

At Signapse, we are making strides towards sign language technology that reflects this future. Working in collaboration with the Deaf community, we are not just recreating signs with our digital signers. We make sure to emphasise facial expressions, spatial awareness and contextual cues to create the most accurate representation of sign language possible.

Follow us on our socials or try SignStream for free to see our technology in action!

FAQs

Will AI eventually replace human sign language interpreters?

We think this is unlikely! Interpreting requires cultural judgement, empathy and contextual understanding that AI cannot fully replicate. AI will assist with everyday communication but not replace professionals in sensitive settings.

Why is sign language harder for AI to learn than spoken languages?

Sign languages depend on body movement, facial expressions and spatial grammar, which are all harder for AI to analyse than audio signals. They also lack the large annotated datasets that spoken languages benefit from. At Signapse, we are at the start of creating these datasets for future generations of sign language translation products.

Can AI help Deaf people right now?

Yes, and you can see it for yourself too! We have implemented our technology across transport hubs, making the travel experience more friendly and accessible. You can see our digital signers in train stations, airports and more.

Related Articles

The Ethics of AI in Accessibility: Where Do We Draw the Line?

What is our planned approach to the grammar of sign language?

Signapse's Sign Language Translation using Generative AI: Achieving Photo-Realism and Accuracy